We know it isn’t real. We know that the “person” on the other end of the screen doesn’t have feelings, a body, or a past. And yet, we talk to it, confide in it, even fall for it. Artificial intelligence has entered our emotional lives quietly but decisively. Apps and AI chatbots now listen, flirt, reassure, and comfort — offering what feels like empathy on demand. For some, it’s entertainment or stress relief. For others, it begins to feel like love.

As a new year takes shape, many people set intentions to strengthen their relationships: spending more time with a partner, repairing a strained sibling bond, and finally making space for friends. Yet in the age of AI, something else is happening alongside those resolutions. Some people are choosing to step away from the uncertainty of human connection and toward the predictability of the virtual. The rise of AI companions — so intimate they now anchor cafes designed for “dates” with your digital partner — signals a shift in how we seek closeness. And there is little reason to believe this trend will slow.

At the heart of this shift is something deeply human: our need for reflection. From our earliest moments, we learn who we are through the responses of others. When a caregiver smiles, soothes, or mirrors our distress, we absorb a fundamental message: I matter. I exist. That need to feel seen does not end in childhood. It becomes the emotional glue of adult relationships — the way we feel known, valued, and real in the presence of another.

The Intimacy We Know Isn’t Real — but Still Choose

AI exploits this developmental need with remarkable precision. It listens closely. It remembers. It responds in ways that feel attuned and affirming. It doesn’t interrupt or withdraw. It doesn’t get defensive or distracted. But while it feels like mirroring, it is actually mimicking.

Your AI companion seems to “get you” better than anyone else precisely because it is built from you. In human relationships, there is mutual impact: my words affect you, your reaction affects me, and something new emerges between us. That back-and-forth creates a living emotional system — sometimes frustrating, often imperfect, but real. AI removes that mutuality. It offers an emotional hologram: responsive without being affected, attentive without having stakes. And as with any relationship built on illusion, the danger lies not in the comfort it provides, but in how quietly comfort can slide into confusion.

When Comfort Turns Into Distortion

Some of these dynamics echo what we see in gaslighting.

Gaslighting is an insidious form of emotional manipulation in which one person, over time, leads another to question their perceptions, judgments, or sense of reality. The same vulnerabilities that make people susceptible to human gaslighting — longing for validation, fear of conflict, uncertainty about one’s own perceptions — also make AI’s emotional simulation especially compelling. The chatbot offers the sensation of being understood, so long as you surrender the shared reality that real relationships require.

By providing constant affirmation without friction, AI keeps users in a closed loop of self-confirmation.

A growing number of real-world accounts illustrate how these dynamics can escalate beyond attachment into harmful distortion. As NPR reported, some users of AI chatbots described experiences in which prolonged interactions led to unhealthy emotional attachments and breaks with reality, prompting people affected by these spirals to form peer support groups to help one another recover.

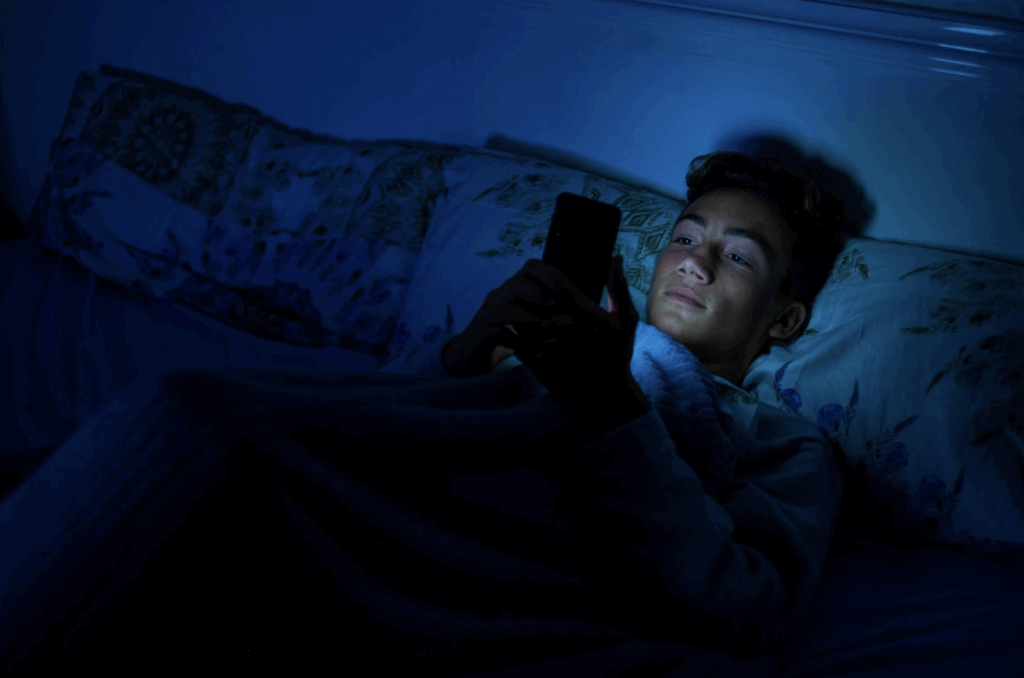

Over time, that loop can distort reality in ways that resemble gaslighting’s effects: disorientation, dependency, and a loosening of one’s grounding in the external world. What begins as comfort can become reliance, and in some cases, delusion. Reports of AI-linked psychosis are rising, particularly among adolescents and individuals already vulnerable to mental health struggles.

So why do we fall into these pseudo-relationships even when we know they aren’t real?

Part of the answer is availability. AI is always there. It doesn’t cancel plans. It doesn’t drift away. You are never left on read. In moments of loneliness or anxiety — especially when human relationships feel fragile or out of reach — the constant presence of a chatbot can feel stabilizing, even protective.

Another part of the answer lies in our emotional histories. Early experiences shape what intimacy feels like. For those who grew up feeling consistently seen and soothed, an AI companion’s steady affirmation may feel familiar and comforting. For others who had to earn affection, navigate emotional inconsistency, or question their own reality, AI can feel like a long-overdue repair. A relationship without conflict, without challenge, without the risk of being wrong can feel like relief. It offers not just connection, but a do-over.

And then there is the illusion of intimacy itself. AI doesn’t simply reflect what we say; it reflects what we hope love will be. Even its glitches or misunderstandings can create a kind of emotional push-pull that mirrors human relationships closely enough to keep our attachment systems engaged.

The unpredictability, followed by reassurance, activates the same circuitry that keeps people invested in volatile relationships.

When Awareness Breaks the Spell

At some point, though, many people sense that something is off — that what feels like a connection may actually be a hall of mirrors. That recognition need not provoke shame. It can be the beginning of clarity. Awareness restores agency. It invites reflection rather than reaction. What need is being met in these interactions? What does it mean to feel “seen” by something that cannot truly see you? How much emotional energy is flowing toward a screen rather than toward the messy, demanding presence of other people?

Sitting with these questions can be grounding. Not because they offer quick solutions, but because they re-anchor us in reality. Digital interactions provide immediacy and ease. Human relationships require patience and repair. They require vulnerability. They frustrate and disappoint us. And they change us. Noticing where we are investing our time and attention can reveal whether we are seeking genuine connection or shelter from it.

But the larger question may not be how to manage our relationships with AI. It may be what these relationships reveal about the moral and social conditions of our lives.

Why Friction Is the Point of Human Connection

We are living through a quiet crisis of loneliness. Over the past decade, social isolation has increased, trust has eroded, and many people report having fewer close relationships than ever before. Community institutions that once held people together — neighborhoods, faith communities, civic groups, even extended families — have a weaker pull on our time. In that landscape, AI offers something that feels rare and precious: attention without rejection, intimacy and presence without obligation or risk.

Seen this way, our attraction to AI companions is not mysterious. It is a rational response to a world that often feels emotionally thin and relationally unsafe. When human connection is scarce or fraught, a relationship that promises affirmation without conflict can feel not indulgent, but necessary.

Yet this raises a deeper moral question. Human relationships are not simply sources of comfort; they are the places where we learn responsibility, restraint, empathy, and repair. They shape our character precisely because they resist our control. To be in relationship with another person is to be accountable: to be interrupted, confronted, disappointed, and sometimes called to be a better self. These are not bugs in human connection; they are its moral core.

AI companionship, by contrast, asks almost nothing of us. It does not require patience. It does not demand forgiveness. It does not ask us to consider another person’s inner life or to tolerate discomfort for the sake of connection. Over time, a steady diet of such frictionless intimacy may not only change how we relate — it may change what we expect relationships to be.

This is not an argument against technology. It is an invitation for reflection. What happens to a society when simulated connection begins to replace the slow, demanding work of real attachment? What happens to our capacity for caring and citizenship when we grow accustomed to relationships that revolve entirely around us?

Falling for AI is not a personal failing — it’s a cultural signal.

AI will continue to learn how to speak our emotional language with astonishing fluency. But being human is not programmable. It is developed — in kitchens and classrooms, on playgrounds, in disagreements and reconciliations, and in moments when we stay present even when it would be easier to retreat. If this moment teaches us anything, it may be that our hunger for connection is not the problem. The deeper challenge is whether we are willing to rebuild the relational world that can actually sustain it.

The views expressed here are those of the authors and not of Yale School of Medicine.

This post was submitted as part of our “You Said It” program. Your voice, ideas, and engagement are important to help us accomplish our mission. We encourage you to share your ideas and efforts to make the world a better place by submitting a “You Said It.”

How to Help

The Yale Center for Emotional Intelligence, a Make It Better Foundation 2026 Philanthropy Award winner, is a leader in advancing the science and practice of emotional intelligence, translating decades of research into tools and training that help schools and communities thrive. Support fuels rigorous research, large-scale studies, and the development of accessible, evidence-based curricula, digital resources, and coaching for educators and leaders, with a focus on reaching under-resourced schools. This work strengthens well-being, creates safer and more inclusive learning environments, and builds the emotional, relational, and leadership skills students and adults need to succeed in school, work, and life.

Robin Stern, Ph.D., is the author of The Gaslight Effect and the host of “The Gaslight Effect Podcast.” She is a psychoanalyst in private practice and the co-founder and senior adviser to the director of the Yale Center for Emotional Intelligence.

Marc Brackett, Ph.D., author of Dealing with Feeling, is the founding director of the Yale Center for Emotional Intelligence and a professor in Yale’s Child Study Center.